How can we use evaluations of a journey to discourage copying and pasting from AI?

Most digitally captured data comes from a Global North context. This means that AI output built on that data will tend to reflect a Global North perspective.

How can students who are learning new content evaluate the truth of claims made by AI models if they do not already have a background in that field?

The campus food survey used AI to create an image.

NotebookLM is an AI tool that lets you select the sources from which it generates content.

Do premium AI options create a divide between students who can afford the “better” program and those who cannot?

How is OpenAI scraping the internet different from a person observing all of the internet and sharing what they find with you?

How will fees for running queries change as the environmental and social impacts of AI become more significant?

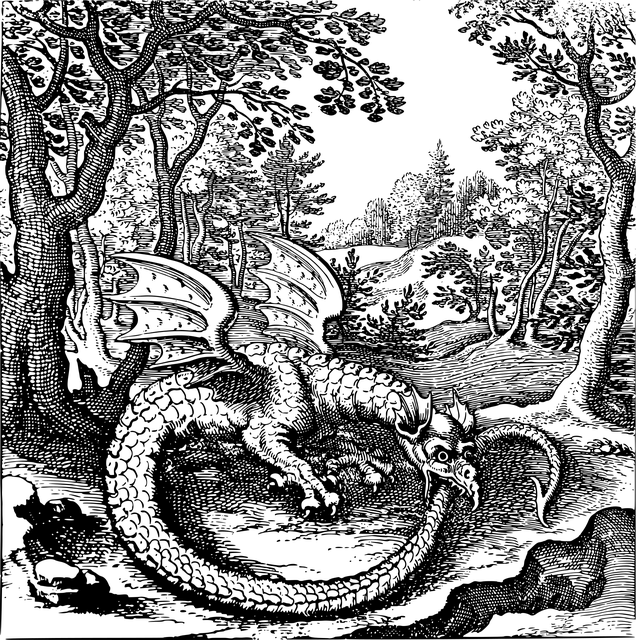

Our class conversations about AI self-cannibalization reminded me of the Ouroboros, a symbol I had always interpreted as representing greed and self-defeat. However, it is also discussed in reference to cyclical structures, in which the creature grows out of itself while also consuming itself.

This perspective led me to reflect on why we consider this form of self-consumption negative, while other cultures saw the Ouroboros as positive. One example of this positive view of cycles is the annual flooding of the Nile's banks, which the ancient Egyptians used to mark their year. That cycle included both construction and destruction, yet overall it was seen as positive.

We, by contrast, seem to consider AI-generated content useful only when it is consumed by humans, and wasteful when AI is trained on it. This hints at how differently we value content created by humans and content created by AI. When a chatbot hallucinates, we dismiss the response as incorrect, whereas when a human proposes a new idea, we weigh its value and logical structure before deciding whether it is worthwhile.

This reminds me of how we teach children compared to adults. Children are told to rehearse thoughts and ideas, and when they say something “wrong,” they are often simply told they are wrong and sent back to rehearsing. With adults, we more often ask how they reached their conclusion and try to identify where the reasoning went wrong. We seem, then, to treat children and AI in similar ways: dismissing and ignoring them when they are incorrect, but celebrating them when they are correct.